A while ago, I decided to learn Rust and thought it would be a great opportunity to combine it with my second biggest interest besides coding: sports! I came up with the idea of displaying all my Strava activities on a single map. The purpose was to visualize all the tracks I've been on, allowing me to identify unexplored areas and focus on reaching them in the future, particularly for cycling.

I knew that some processing would be required on this data, so I sold myself on the idea that Rust would be a good fit for such a task. Coming from a background in C and C++, where my mind got accustomed to thinking in bits, I also told myself that this would be a good opportunity to learn 'big data', as my sports activities are the largest amount of data I've gotten my hands on so far.

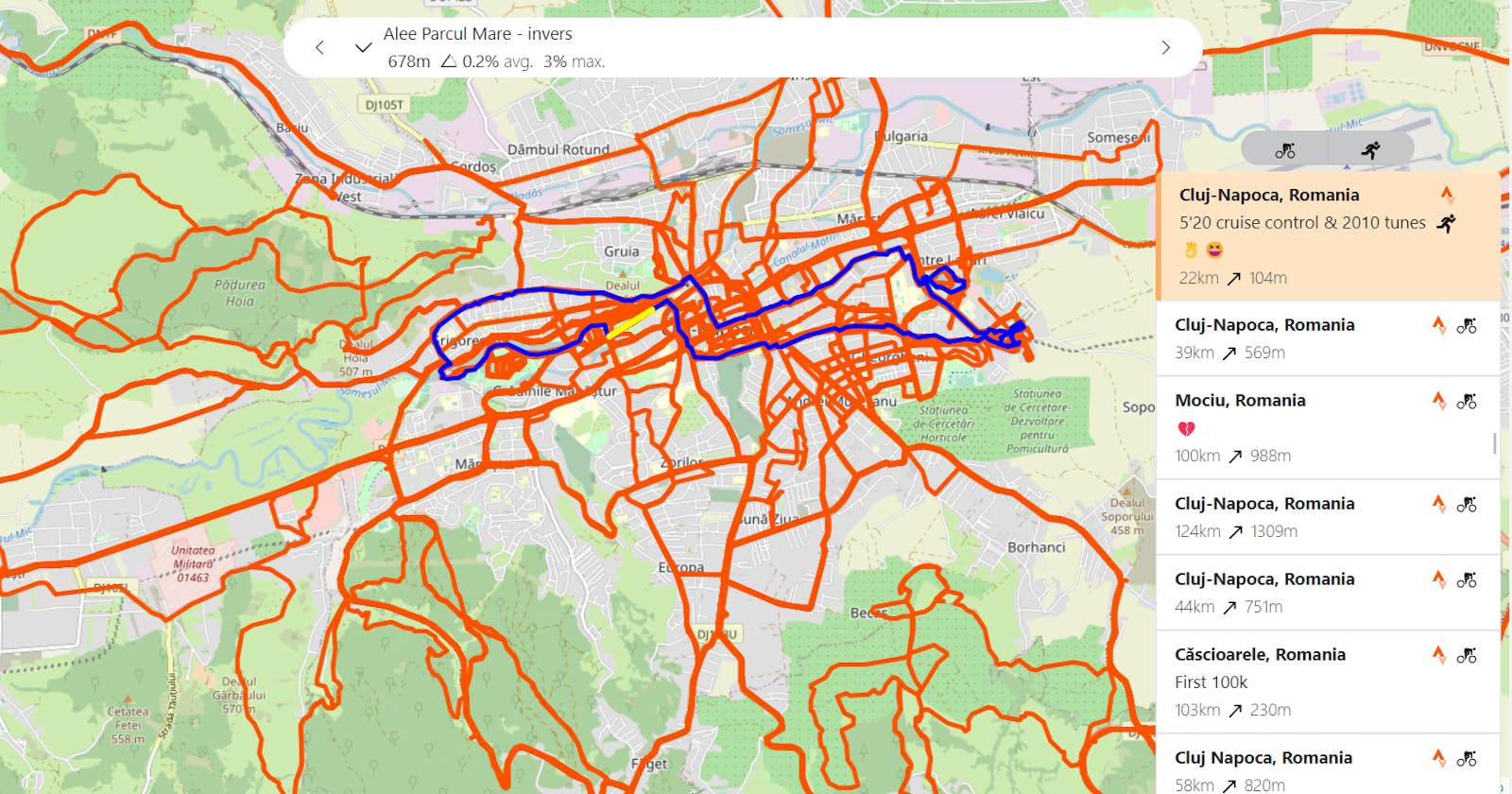

Before diving into the details, here is the app I've managed to build. I called it "The World Covered" because my intention with it was to see how much of the world I've covered so far by cycling. It's a good thing I did because I saw how little I actually did.

The first steps I took were to figure out how to download all my activities from Strava and to see whether displaying them on a single map (through a web app) would crash the webpage or make it very sluggish. Let's see how I tackled these two topics.

If you are curious about the code, you can find it all in the repos below:

Backend ▶️ https://github.com/24rush/the-world-covered

Frontend ▶️ https://github.com/24rush/the-world-covered-web

Handling the data

Strava API

All my activities are stored in Strava so, naturally, I had to figure out how to get them out of there. Conveniently, Strava offers an API for such tasks. The only problem was that I wanted to call it from Rust, which was not that well documented.

Get the breakdown of how I accessed Strava's API from Rust in this separate article below:

MongoDB

Once I had my data source set up, I went on to figure out how to persist it. After an unfortunate attempt at putting the data into a Redis database, I moved to a more appropriate MongoDB instance.

I had used MongoDB some years ago and didn't think much of it at that time but man, was I impressed this time around! The richness of features and ease of use across all its API realms (server side, web clients, Atlas web portal, tools, etc.) is second to none. Hats off to them! Not to give them ideas, but this is one of the few services I would happily pay money for - it just so happens that they have a very good free tier so I didn't have to.

Get the breakdown of how I used Rust to store the Strava data in MongoDB in this separate article below:

I also knew that the web app would be using MongoDB's WebSDK for retrieving the data in production. For development purposes, however, I wanted to use data from a local MongoDB database, so I had to create a Rust app that would act as my MongoDB stub during development (more details on this later on in the article). As MongoDB queries are plain JSON documents, my Rust server just needs to run each incoming query against the local mongod instance and return the resulting documents to the client, also as JSON.

A quick design diagram can be seen below:

You can see in the gist below how the switching between the two of them was implemented. Spoiler alert: it uses an interface :)

Worth noting the amazingness of MongoDB's WebSDK which with around 5 lines of code, allows you to read from the cloud database. Lines 17-19 specify the application ID you want to connect to and your credentials, lines 24-25 issue the request for data.
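To make the interface idea concrete, here is a minimal sketch of how such a switch could look. It is not the code from the gist: `DataSource`, `LocalStubSource`, `CloudSource`, `pickDataSource` and the `{ collection, query }` request body are all hypothetical names and shapes chosen for illustration, and the HTTP call and the cloud query are injected as plain functions so the sketch does not depend on any particular SDK.

```typescript
// Hypothetical sketch: one interface, two interchangeable backends.
type Doc = Record<string, unknown>;

interface DataSource {
  find(collection: string, query: Doc): Promise<Doc[]>;
}

// Sends a JSON body to a URL and resolves with the response text.
type PostJson = (url: string, body: string) => Promise<string>;

// Runs a query against the cloud database (e.g. a thin wrapper over the Web SDK).
type CloudFind = (collection: string, query: Doc) => Promise<Doc[]>;

// Development: forwards the query as JSON to the local Rust server.
class LocalStubSource implements DataSource {
  private readonly post: PostJson;
  private readonly url: string;

  constructor(post: PostJson, url: string = "http://localhost:8000") {
    this.post = post;
    this.url = url;
  }

  async find(collection: string, query: Doc): Promise<Doc[]> {
    // The request body shape is an assumption made for this sketch.
    const text = await this.post(this.url, JSON.stringify({ collection, query }));
    return JSON.parse(text) as Doc[];
  }
}

// Production: delegates to whatever function talks to the cloud database.
class CloudSource implements DataSource {
  private readonly cloudFind: CloudFind;

  constructor(cloudFind: CloudFind) {
    this.cloudFind = cloudFind;
  }

  find(collection: string, query: Doc): Promise<Doc[]> {
    return this.cloudFind(collection, query);
  }
}

// The rest of the app only ever sees a DataSource.
function pickDataSource(isDev: boolean, post: PostJson, cloudFind: CloudFind): DataSource {
  return isDev ? new LocalStubSource(post) : new CloudSource(cloudFind);
}
```

With something like this in place, the components that need data never know (or care) whether the documents came from the local mongod instance or from the cloud.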

Handling the map

Now that I had my data stored locally, I could start thinking about how to visualize it in a web app. At first, all I wanted was to display as many activities as possible on a single map and see how the browser behaved. I didn't know how much data that would require and, not seeing many apps like that in the wild, I was concerned that there might be a reason for it.

Leaflet.js

One of the best decisions I've made at this point was to use Leaflet.js for handling the OSM maps in the browser.

What is Leaflet.js

Luckily, Strava's API describes GPS tracks as Google encoded polylines, a format that is straightforward to decode into the latitude/longitude lists Leaflet draws, so I could use the data coming in from Strava almost directly to display it on the map. What I created initially was just a wrapper over Leaflet.js which allowed me to:

display polyline (GPS track of the activity) on the map

highlight polyline when hovering it

show/hide all polylines

get notified when the user clicked on a polyline

center the map, pan to a GPS location, perform zoom at a particular GPS position

This resulted in the src / leaflet / LeafletMap class.
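For readers who have not met the encoded polyline format before, here is a minimal sketch of a decoder. It follows Google's published Encoded Polyline Algorithm Format (5 decimal digits of precision by default); the function name `decodePolyline` is my own, and in a real project a ready-made plugin or library would do the same job.

```typescript
type LatLng = [number, number];

// Decodes a Google encoded polyline string into [lat, lng] pairs.
function decodePolyline(encoded: string, precision: number = 5): LatLng[] {
  const factor = Math.pow(10, precision);
  const points: LatLng[] = [];
  let index = 0;
  let lat = 0;
  let lng = 0;

  // Reads one variable-length, zigzag-encoded signed delta starting at `index`.
  const readDelta = (): number => {
    let result = 0;
    let shift = 0;
    let chunk: number;
    do {
      chunk = encoded.charCodeAt(index++) - 63; // each character carries 5 payload bits
      result |= (chunk & 0x1f) << shift;
      shift += 5;
    } while (chunk >= 0x20); // bit 0x20 set means "more chunks follow"
    // The lowest bit holds the sign; undo the zigzag encoding.
    return result & 1 ? ~(result >> 1) : result >> 1;
  };

  while (index < encoded.length) {
    // Each point is stored as a delta from the previous point.
    lat += readDelta();
    lng += readDelta();
    points.push([lat / factor, lng / factor]);
  }
  return points;
}
```

The resulting array of coordinate pairs is the shape that Leaflet's `L.polyline` accepts.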

Having these pieces together resulted in a web app that could display the routes I've been on during my activities. What I found out immediately was that requesting the data for all my activities in one go amounted to around 6MB, which did not make for the best user experience during retrieval. Once everything was loaded onto the map, though, the page was smooth - which was a huge relief. I will later discuss how I optimized this step by using a worker thread and an incremental approach to downloading the activities.

Having fewer concerns about the feasibility of the app, I went on to think about its main features and put in place an overall architecture. I also researched where I could host it as cheaply as possible and how I would get around the security issues of it being mostly a serverless app.

Let me first state the list of features I came up with:

Display the complete list of tracks I've been on (while running, cycling, etc.) on the map

Merge all my tracks into unique ones as I usually take the same track multiple times

Extract meaningful information from:

the telemetry data (altitude, distance, time, GPS coordinate, etc) like longest climbs or descents, fastest 12 min runs (for Cooper test), etc.

the activities themselves like the number of km per year, number of hours, days I've ridden most often, etc.

Query the data using natural language (with the help of OpenAI's SDK)

Extract even more meaningful information from the data by using ML models

Architecture

Considering the requirements above, the overall architecture diagram of the project looked like this:

Rust backend (data pipeline) 1

This is the main chunk of the app and the reason I created this project - to learn Rust. It contains the processing pipeline for the Strava data and the handling of MongoDB.

The details of how I processed all this data can be read in detail in the article below:

and the way I hosted my Rust backend in GCP can be read below:

MongoDB databases 2, 5

You might have spotted that there are two instances of MongoDB in the project. I did it like that because I wanted a local instance of the data that I could manipulate more easily and quickly, plus I didn't want to use up my MongoDB free-tier quota on development.

There are two databases to work with, one (strava_db) for mostly static (read-only) data coming in through the Strava API and another database (gc_db) for the data I am creating through the Rust pipeline (unique activities, statistics, etc.).

The content of the databases is as follows:

| strava_db collection | Contents | gc_db collection | Contents |
| --- | --- | --- | --- |
| Athletes | data about the athlete we are storing activities for | Routes | all the activities merged into unique ones based on their GPS tracks |
| Activities | the complete set of activities for the athletes | Statistics | different statistics on the data |
| Telemetry | telemetry data for each activity (GPS points, altitude data, etc.) | | |

To synchronize the local databases with the cloud ones, I am using two very nifty tools that MongoDB provides and these are: mongodump & mongorestore. The first one dumps all the data from your mongod instance into a local folder and the second one restores the target database (in my case the cloud database) with the data found in this folder.

The sync procedure looks like this:

mongodump

mongorestore --uri mongodb+srv://<username>:<password>@<db_url> --nsInclude=strava_db.activities

where db_url is the URL of the cloud MongoDB and the value of --nsInclude parameter is the collection I want to synchronize.

The sync procedure needs to be run in a few instances:

initially when the remote database is created

every time a new activity comes in from Strava API

Rust local server 3

Because of the local database I am using, I had to provide a data endpoint to the web app during development and this is where the Rust local server comes into play. This is a plain Rocket.rs web server that listens for incoming query requests from the local web app and returns the corresponding JSON data from the database.

CORS & Fairings

If there is someone to spoil a party, that is CORS and, obviously, it had to spoil mine. Luckily, Rocket.rs has all the pieces to make this fix a breeze, at least if you know what you have to do. In my case, that was to implement a CORS fairing for all my responses, which informs the browser that, as a server, I'm allowing requests from localhost:5173, the address of the local Vite web server hosting the development web app.

In line 28 you can see how the fairing is attached to the rocket instance. Moreover, in line 6, I tell Rocket that this fairing is for Response, which makes it call my on_response method after each request is handled but just before the response is passed back to the client. This way, when the browser issues the pre-flight OPTIONS request, I can attach the Access-Control-Allow-XYZ response headers required for CORS. Without these headers, browsers simply block the request.

Data requests

After handling CORS, the actual requests are handled like so:

My web app, through the mechanism I mentioned earlier in the article, issues a POST request to localhost:8000 which contains a preformatted JSON query to be issued to MongoDB. The Rust local server then parses this JSON string into the bson format needed by the Rust mongo driver and proceeds to run it against the local database. The response is then formatted as JSON and sent back to the client.

This is how in the development environment I can use the local mongo database and in production the cloud version. I later figured that through this setup I could also target the remote database by changing the connection string the Rust mongo driver is using. Double win.

Web application 4

As I am a bit of a fanboy of TypeScript, I had to use it to build the web app and because I also had some experience with Vue.js, I went ahead with the two of them plus Vite as my bundler. This is another instance where I was pleasantly surprised at how good these frameworks have become. It seems the web ecosystem is settling on some standards which are getting more reliable and polished as time passes.

I am not going to go into much detail on the web part because it's the usual Vue.js & TypeScript website but I want to touch on how I managed to make it work smoothly considering the amount of data it has to display.

The challenge was the main page which had to contain all my unique (deduplicated) routes - 320 of them and worth around 6MB of data. Downloading them in one go was not feasible and also not needed as the viewport doesn't even contain them when the page first loads.

The solution came in two parts:

using a worker thread for requesting & processing any data from the database

Worker threads in web applications have become the standard nowadays so no need to explain how they work. In my app, the implementation consists of two files:

▶️ src / webworker_handler.ts containing the 'frontend' for the thread and the class that the rest of the application uses when it needs data. Its job is to register the data requests, dispatch them to the thread using postMessage and then proxy the results back incrementally to their corresponding requesters as they are processed by the thread.

%[gist.github.com/24rush/9388a52ff4ae9bfe0724..

▶️ src / worker.js containing the actual thread function which has the responsibility of taking the query and calling the data endpoint with it. After the result is received, it does post-processing on each item (track) and calls the requester back with the outcome. This way, the resulting activities are being returned item by item, not all in a bunch.
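As a rough illustration of that split, here is a minimal sketch of what the 'frontend' side could look like. This is not the code from the gist: `WorkerFrontend`, `WorkerRequest`, `WorkerReply` and the `done` flag are hypothetical names, and the function that posts to the worker is injected so the sketch stays independent of the browser's `Worker` class.

```typescript
// Hypothetical message shapes exchanged with the worker thread.
type WorkerRequest = { requestId: number; query: string };
type WorkerReply = { requestId: number; item?: unknown; done?: boolean };

type Requester = { onItem: (item: unknown) => void; onDone: () => void };

class WorkerFrontend {
  private nextId = 0;
  private readonly pending = new Map<number, Requester>();
  private readonly post: (msg: WorkerRequest) => void;

  // In a browser, `post` would typically be (msg) => worker.postMessage(msg),
  // and worker.onmessage would forward event.data to handleReply.
  constructor(post: (msg: WorkerRequest) => void) {
    this.post = post;
  }

  // Registers a requester and sends its query to the worker thread.
  request(query: string, onItem: (item: unknown) => void, onDone: () => void): number {
    const requestId = this.nextId++;
    this.pending.set(requestId, { onItem, onDone });
    this.post({ requestId, query });
    return requestId;
  }

  // Routes each reply to the requester that asked for it, one item at a time.
  handleReply(reply: WorkerReply): void {
    const requester = this.pending.get(reply.requestId);
    if (!requester) {
      return; // unknown or already completed request
    }
    if (reply.done) {
      this.pending.delete(reply.requestId);
      requester.onDone();
    } else {
      requester.onItem(reply.item);
    }
  }
}
```

Because every reply carries its request id, several components can have requests in flight at once and each still receives only its own tracks, as they arrive.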

requesting activities incrementally as the user panned/zoomed the map and they came into view

This was accomplished by doing some server-side processing on the activities and determining their distance to the capital city (Bucharest) in intervals of 100km. Every activity therefore has a number allocated: 0 if it's less than 100km away from Bucharest, 1 if it's between 100 and 200km away and so on. The blue circles represent these regions and you can see that for the first ring, there aren't that many activities. In fact, if the viewport only shows an area less than 100km away from Bucharest, around 170kb of data is enough.

As the user pans/zooms the map, I get notified of a new bounding box of the map and for each, I compute the distance of the NW corner to the capital city. If it's more than the last ring I retrieved, I make a call for the activities on the next ring.

%[gist.github.com/24rush/340eecf08172e3955783..
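The ring logic described above can be sketched roughly as follows. This is an illustration under my own assumptions rather than the code from the gist: distances use the haversine formula, Bucharest is taken to be at roughly 44.4268°N, 26.1025°E, and `ringOf` and `nextRingToRequest` are hypothetical names.

```typescript
type Point = { lat: number; lng: number };

const BUCHAREST: Point = { lat: 44.4268, lng: 26.1025 }; // approximate city centre
const RING_WIDTH_KM = 100;
const EARTH_RADIUS_KM = 6371;

// Great-circle distance between two points using the haversine formula.
function haversineKm(a: Point, b: Point): number {
  const toRad = (deg: number): number => (deg * Math.PI) / 180;
  const dLat = toRad(b.lat - a.lat);
  const dLng = toRad(b.lng - a.lng);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLng / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(h));
}

// Ring 0 is under 100km from Bucharest, ring 1 is 100-200km, and so on.
function ringOf(point: Point): number {
  return Math.floor(haversineKm(BUCHAREST, point) / RING_WIDTH_KM);
}

// Given the last ring already downloaded and the NW corner of the current
// bounding box, returns the next ring to request, or null if nothing is needed.
function nextRingToRequest(lastRingLoaded: number, northWestCorner: Point): number | null {
  return ringOf(northWestCorner) > lastRingLoaded ? lastRingLoaded + 1 : null;
}
```

For example, with only ring 0 loaded and the map panned so that its NW corner sits over Cluj-Napoca (a bit over 300km from Bucharest), `nextRingToRequest` asks for ring 1; subsequent map updates would then pull in rings 2 and 3.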

This is how I managed to go from 6MB of data to 170kb on the initial load and make the page move smoothly. I have to tell you that I find it quite mesmerizing how the page keeps popping new routes as I move it around - I guess it's also because it brings back memories from those places 😊

Hosting & Security

From the beginning, I wanted the website to offer the possibility of searching through the activities using natural language. After doing some research, I concluded that I could use OpenAI's SQL Translate API, but this requires an api_key that should be kept private: it's tied to your account's quota, so anyone who has it could eat into your (paid) quota. To benefit from this feature safely, you need a server component that hides the API key. Clients call the public functions exposed by this server, which then uses the api_key internally to query OpenAI.

Till this point, I've managed to keep everything serverless, meaning that all my code was meant to be run on the client but with the introduction of this constraint from OpenAI's api_key, I had to improvise. I didn't have a particular reason for keeping everything on the client side just that I didn't want to pay for hosting and also I was not particularly keen on delving into these technologies as they were not the focus of this project.

Vercel 6

This would be around the third instance where I was to be extremely impressed by how good some technologies have become - at the same level as I've been impressed by MongoDB.

What is Vercel?

Initially, I wanted to use Vercel just for API hosting, meaning that I wanted it to be a proxy between the web page and OpenAI's SDK so I could hide my api_key. After some investigation, I found out that it could also host my web application in a very neat setup (before that I was using GitHub Pages, which was an ok way to do it but not even close to how amazing Vercel would prove to be).

Web application hosting

I mentioned earlier how the industry tends to settle on some web technologies, which then become broadly adopted and standardized. Vercel saw this as well and offers out-of-the-box automatic configuration for deploying web applications based on popular frameworks, Vue.js among them.

One other neat aspect of Vercel is that it connects to GitHub and listens for new commits to automatically redeploy your app. After each new commit you push to your repo, Vercel will build your app and redeploy it - all happening in about 15 seconds (for my app). Amazing!

How I configured it is so straightforward that it's not worth writing about but you can find all the details here on Vercel's website.

Having the hosting covered now, I could proceed with the OpenAI proxy part.

Serverless functions

What are Serverless functions?

In a nutshell, this is what the Vercel setup looks like:

As I mentioned in the previous chapter, the deployment of the app starts with a push to the GitHub repo, which triggers Vercel to build and redeploy the app. The part we are interested in now is related to OpenAI and, more precisely, how to call their API when the user triggers a search request from the webpage.

The file below shows the serverless function responsible for calling OpenAI's API using the environment variable process.env.OPENAI_API_KEY (line 32). By using this environment variable which is part of the server's configuration, I could hide it from the client.

This is the thing of beauty #1 but check out #2: the file below is stored in my repo under the /api folder with the name genq.ts (generate query). This instructs Vercel to create a route /api/genq for my domain. Any HTTP request issued to that route will call the handler (serverless) function listed below 👏

Notice our old friend CORS again but this time around we configure it to allow requests only from my origin (line 3) which is the address of the website.

Having these in place, my web page will now need to issue requests to the-world-covered.vercel.app/api/genq to trigger calls to OpenAI. Notice how the request does not contain any private information, just the search query itself.
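To summarize the proxy pattern in code, here is a framework-free sketch. It deliberately does not use Vercel's real handler signature or OpenAI's SDK: `makeGenqHandler`, `ProxyRequest`, `ProxyResponse` and `GenerateQuery` are hypothetical names, and the function that actually talks to OpenAI is injected. In a real serverless function the key would come from an environment variable such as process.env.OPENAI_API_KEY.

```typescript
// Hypothetical, simplified request/response shapes for illustration only.
type ProxyRequest = { method: string; body?: { query?: string } };
type ProxyResponse = { status: number; headers: Record<string, string>; body: unknown };

// Stands in for the call to OpenAI; receives the secret key and the user's text.
type GenerateQuery = (apiKey: string, userQuery: string) => Promise<string>;

function makeGenqHandler(
  apiKey: string | undefined, // read from the server's environment, never sent to clients
  allowedOrigin: string, // the only origin allowed to call this endpoint from a browser
  generate: GenerateQuery,
): (req: ProxyRequest) => Promise<ProxyResponse> {
  return async (req: ProxyRequest): Promise<ProxyResponse> => {
    const headers: Record<string, string> = {
      "Access-Control-Allow-Origin": allowedOrigin,
      "Access-Control-Allow-Methods": "POST, OPTIONS",
      "Access-Control-Allow-Headers": "Content-Type",
    };

    // Answer the browser's CORS pre-flight request.
    if (req.method === "OPTIONS") {
      return { status: 204, headers, body: null };
    }
    if (req.method !== "POST") {
      return { status: 405, headers, body: { error: "Method not allowed" } };
    }
    if (!apiKey) {
      return { status: 500, headers, body: { error: "Server is missing its API key" } };
    }

    const userQuery = req.body?.query;
    if (!userQuery) {
      return { status: 400, headers, body: { error: "Missing search query" } };
    }

    // Only the generated query travels back to the client; the key stays here.
    const generated = await generate(apiKey, userQuery);
    return { status: 200, headers, body: { query: generated } };
  };
}
```

The important property is that the secret only ever appears on the server side of the call: the browser sends plain search text and gets a generated query back.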

I go into detail on how I integrated OpenAI into my project in this separate article below:

This concludes my work thus far on this project; however, there is one more important feature I wish to incorporate as part of my skills development initiative. I aim to use machine learning to extract meaningful information from my data. At the moment, I am researching this topic, but please feel free to share any pointers or ideas in the comments.

Thank you for reading and let's keep in touch ✌️